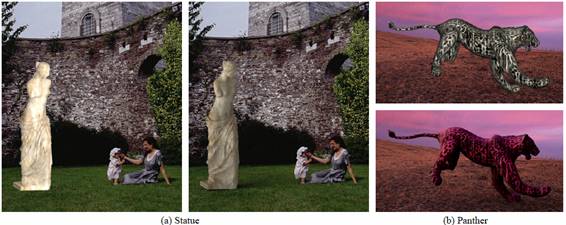

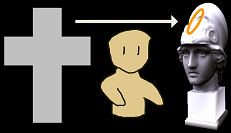

Figure 1: Image-based lighting is useful because it represents complicated incoming light compactly as a cross image (left) and produces realistic rendering results (right).

Figure 2: Environment maps are captured using a mirror ball and high dynamic range photographs. However, an environment that matches what a designer wants is not always available.

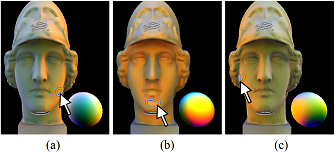

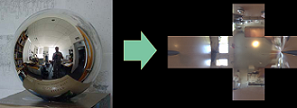

Figure 6: The recovered lighting environment is shown in the bottom-right sphere.

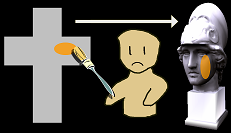

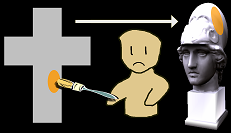

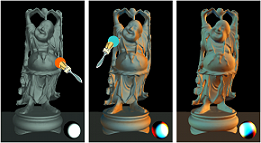

Figure 7: Orange highlights and white highlights are painted, and a rendered image from a different viewpoint is shown. The light source distribution estimated from the user input is visualized in the vertical cross.

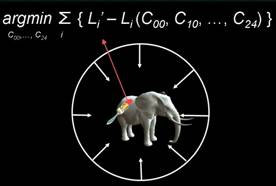

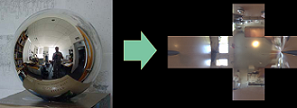

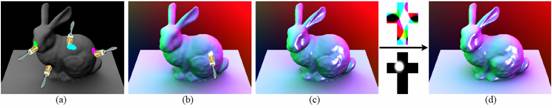

Figure 8: The user clicks and drags the white-lit part to rotate the lighting environment. The lighting environment rotates smoothly so that the surface colors under the mouse cursor stay constant.
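
One way to picture the drag interaction in Figure 8 is as a minimal sketch, assuming a purely diffuse surface: if the surface normal under the cursor was `n_start` when the drag began and is `n_now` at the current cursor position, then rotating the environment by any rotation that carries `n_start` onto `n_now` keeps the shading under the cursor unchanged. The function name `rotation_between` and both normal variables are hypothetical, and the shortest-arc rotation used here is an illustrative choice rather than the method confirmed by the source.

```python
import numpy as np

def rotation_between(a, b):
    """Return the 3x3 shortest-arc rotation matrix that takes direction a to direction b."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    a = a / np.linalg.norm(a)
    b = b / np.linalg.norm(b)
    v = np.cross(a, b)          # rotation axis scaled by sin(angle)
    c = float(np.dot(a, b))     # cos(angle)
    s = np.linalg.norm(v)       # sin(angle)
    if s < 1e-12:
        if c > 0:
            return np.eye(3)    # directions already coincide
        # Opposite directions: rotate 180 degrees about any axis perpendicular to a.
        helper = np.array([1.0, 0.0, 0.0]) if abs(a[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
        axis = np.cross(a, helper)
        axis /= np.linalg.norm(axis)
        return 2.0 * np.outer(axis, axis) - np.eye(3)
    # Rodrigues' formula written with the unnormalized axis v.
    K = np.array([[0.0, -v[2], v[1]],
                  [v[2], 0.0, -v[0]],
                  [-v[1], v[0], 0.0]])
    return np.eye(3) + K + (K @ K) * ((1.0 - c) / (s * s))

# Hypothetical normals under the cursor at drag start and at the current position.
n_start = np.array([0.0, 0.0, 1.0])
n_now = np.array([1.0, 0.0, 0.0])
R = rotation_between(n_start, n_now)

# With the environment rotated by R, a world direction d samples the
# original environment at R.T @ d, so n_now sees what n_start saw before.
lookup_dir = R.T @ n_now
```

Applying `R` incrementally on every mouse-move event, from the previous normal to the current one, would give the smooth rotation described in the caption.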

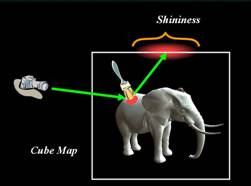

Figure 9: The user can also click and drag the shadow under the bunny's tail.